SSH


Revision as of 14:58, 15 December 2020

Reference

On this Wiki:

Tips

On ssh command:

ssh-keygen -F hostname # Find hostname in ~/.ssh/known_hosts (useful if HashKnownHosts is enabled)

ssh-keygen -l -f ~/.ssh/known_hosts # Print fingerprints of known host keys

ssh -Lport:host:hostport hostname sleep 60 # Forward port, and exit after 60s if no connection is made

ssh -f -N -n -q -L ${BINDADDR}:port:host:hostport hostname # Same, with 'go to background', 'no remote cmd', 'redirect stdin from /dev/null', 'quiet'

If a script is to be called as an ssh command, use 1>&2 instead of >/dev/stderr to redirect a message to stderr (otherwise you'll get an "undefined /dev/stderr" error):

#! /bin/bash

# This script is called as an ssh command with

# ssh hostname thisscript.sh

echo "This will go to stdout"

echo "This will go to stderr" 1>&2

Install

After installing ssh (client & server), you have to create an ssh-key:

ssh-keygen

Regenerate SSH host key

The easiest way is to delete all keys and reconfigure the openssh-server package:

rm /etc/ssh/ssh_host_*

/usr/sbin/dpkg-reconfigure openssh-server

Or manually:

ssh-keygen -t dsa -N "" -f /etc/ssh/ssh_host_dsa_key # DSA keys are disabled by default since OpenSSH 7.0; skip this on recent systems

ssh-keygen -t rsa -N "" -f /etc/ssh/ssh_host_rsa_key

ssh-keygen -t ecdsa -N "" -f /etc/ssh/ssh_host_ecdsa_key

Afterwards, you must delete the old host key from known_hosts file of any client connecting to that server:

ssh-keygen -R server_hostname
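A minimal sketch of the manual route as a reusable function, assuming a current OpenSSH that generates Ed25519, ECDSA and RSA host keys. The function name regen_host_keys and its optional directory argument are hypothetical, added so the commands can be tried on a scratch directory before touching /etc/ssh:

```shell
#!/bin/bash
# regen_host_keys [DIR]: hypothetical helper that replaces the SSH host keys in
# DIR (default /etc/ssh) with fresh keys that have an empty passphrase.
regen_host_keys() {
  local dir="${1:-/etc/ssh}"
  rm -f "$dir"/ssh_host_*
  ssh-keygen -q -t ed25519 -N "" -f "$dir/ssh_host_ed25519_key"
  ssh-keygen -q -t ecdsa -N "" -f "$dir/ssh_host_ecdsa_key"
  ssh-keygen -q -t rsa -N "" -f "$dir/ssh_host_rsa_key"
}

# Usage on a real server (as root); the service is named 'ssh' on Debian/Ubuntu:
#   regen_host_keys && systemctl restart ssh
```

ssh-keygen -A is a shorter alternative after the rm step: it creates every default host key type that is missing, with an empty passphrase and the default file names.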

Configuration

SSH can be configured through file ~/.ssh/config. See man ssh_config for more information. The format is as follows:

# Specific configuration options for host host1

Host host1

Option1 parameter

Option2 parameter

# General configuration options for all hosts.

# Options in this section apply only if the same option was *not already specified* in a relevant host section above.

Host *

Option1 parameter

Option2 parameter

The value to use for each option is given by the first section that matches the host specification and provides a value for that option. So the Host * section should always be at the end of the file: a section placed after it can no longer override any option that Host * sets.
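One way to check which section wins is ssh -G, which prints the effective configuration for a host without connecting (OpenSSH 6.8 or later). A sketch using a throw-away config file, where host1, alice and bob are placeholder names:

```shell
#!/bin/bash
# Write a small test config: one specific section, then the catch-all 'Host *'.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
Host host1
User alice

Host *
User bob
Port 2222
EOF

# -F uses only this file (the system-wide ssh_config is ignored);
# -G prints the resolved options, with keywords in lower case, then exits.
ssh -F "$cfg" -G host1 | grep -E '^(user|port) '
```

For host1, User comes from the first matching section (alice), while Port, which that section does not set, is taken from Host * (2222).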

Command-Line

Remote Command Execution

SSH can execute any command on the remote SSH host. The syntax is

ssh USER@HOST COMMAND ...

If COMMAND starts child processes, ssh only exits when all the children have terminated. This is because these children inherit the stdin/stdout/stderr file descriptors, and ssh has to keep them open.

To avoid that, use -t (and -q to suppress the "Connection ... closed" message):

ssh -qt USER@HOST COMMAND ...

In addition, to prevent the children from being killed when ssh exits, job control must be enabled in the remote shell [1]. Job control is disabled when bash runs in non-interactive mode, so the parent and its children are all in the same process group, which then receives the SIGHUP signal on exit. Enable it with

set -m

in the remote script.

In case of compound commands, the semi-colon may be escaped with \:

ssh -qt USER@HOST COMMAND1\; COMMAND2...

Alternatively, use quotes:

ssh -qt USER@HOST "COMMAND1; COMMAND2..."

If ssh is used within a function or script, and you want to pass along positional parameters that might include spaces or special characters to the remote script, use ${*@Q} (bash 4.4+) or printf " %q" "$@" to escape them:

#! /bin/bash

if [ -n "$SSH_HOST" ]; then

exec ssh -qt "$SSH_HOST" "$0" ${*@Q}

fi
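A minimal sketch of the printf %q approach, assuming the remote login shell is bash (%q output is bash syntax). quote_args is a hypothetical helper name, and the check runs locally, with bash -c standing in for the remote shell that ssh hands the command string to:

```shell
#!/bin/bash
# quote_args ARG...: hypothetical helper that prints its arguments as one string
# which bash on the far side splits back into exactly the same arguments.
quote_args() {
  printf ' %q' "$@"
}

# Local round trip: 'bash -c' plays the role of the remote shell.
remote_cmd="printf '<%s>\n'$(quote_args "a b" "it's" '$HOME' '*')"
bash -c "$remote_cmd"
# Over ssh the same string would be sent with: ssh -qt "$SSH_HOST" "$remote_cmd"
```

This prints <a b>, <it's>, <$HOME> and <*> on four lines: the space, quote, dollar sign and glob all reach the far side unchanged.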

To execute a remote command on remote host and stay connected afterwards, use ssh -t, along with bash rcfile, like:

ssh -t SSH_HOST "bash --rcfile PATH_TO_RC_FILE"

Don't miss the quotes around the command. Bash executes the commands in the rc file and then opens a session. The connection remains open because stdin/stdout are not closed. Option -t forces allocation of a pseudo-terminal connected to the current terminal. Without this option, there is no terminal, so bash would run in batch mode (no prompt), and terminal features like tab completion or colors would be missing.

Another solution is to force bash interactive mode:

ssh SSH_HOST "bash --rcfile PATH_TO_RC_FILE -i"

Since there is no terminal, bash starts in non-interactive mode by default. Option -i forces interactive mode, so the prompt is printed, etc. But this is only a partial solution because there is still no terminal, i.e. no colors and no TAB auto-completion.

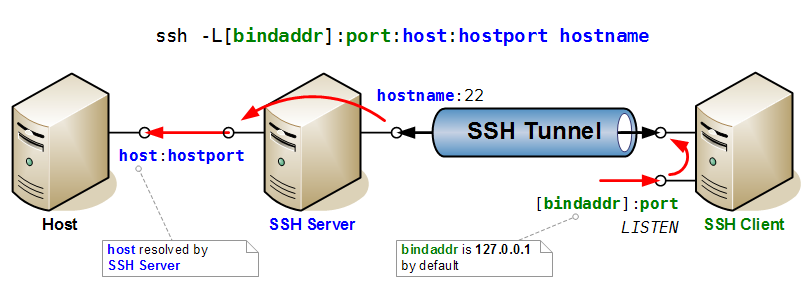

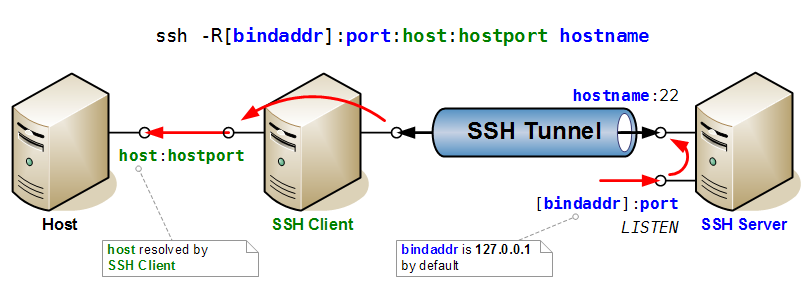

Port Forwarding with SSH

Here are two drawings that illustrate the mechanisms of port forwarding in SSH very clearly.

First the case of direct port forwarding, where a port is opened for listening on the (local) SSH Client, and forwarded to the given host and port on the remote side (i.e. accessible from SSH server).

Then the case of reverse port forwarding, where a port is opened for listening on the (remote) SSH Server, and forwarded to the given host and port locally (i.e. accessible from SSH client).
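The two cases map to ssh -L and ssh -R. A sketch as two hypothetical shell functions; the destination host argument and the port numbers 8080, 80 and 8022 are placeholders:

```shell
#!/bin/bash
# Direct (-L): the client listens on 127.0.0.1:8080; each connection is carried
# over SSH and opened by the server towards localhost:80 (the server itself).
forward_local() {
  ssh -f -N -L 127.0.0.1:8080:localhost:80 "$1"
}

# Reverse (-R): the server listens on port 8022; each connection is carried
# back over SSH and opened by the client towards localhost:22 (the client itself).
forward_remote() {
  ssh -f -N -R 8022:localhost:22 "$1"
}
```

Usage: after forward_local server, http://127.0.0.1:8080 on the client reaches the server's port 80; after forward_remote server, running ssh -p 8022 localhost on the server reaches the client's sshd. By default the -R listener is bound to the server's loopback interface only.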

Applications

SSHFS

SSH FileSystem using FUSE.

References:

Install

sudo apt-get install sshfs

sudo gpasswd -a $USER fuse

Mount / Unmount

mkdir ~/far_projects

sshfs -o idmap=user $USER@far:/projects ~/far_projects

fusermount -u ~/far_projects

The idmap=user option ensures that files owned by the remote user are owned by the local user. This is to deal with situations where these users have different UID. This option does not translate UIDs for other users.

/etc/fstab

Line to add to /etc/fstab (replace USER with the actual user name, since /etc/fstab does not expand variables such as $USER):

sshfs#USER@far:/projects /home/USER/far_projects fuse defaults,idmap=user 0 0

Tips and How-To

Controlling access

By editing .ssh/authorized_keys (see man authorized_keys) one can restrict the access a client has on the remote server.

- port-forwarding connection only

- Edit authorized_keys as follows (from Stack-Overflow [2]):

no-pty,no-X11-forwarding,permitopen="localhost:6379",command="/bin/echo do-not-send-commands" ssh-rsa rsa-public-key-code-goes-here keyuser@keyhost

- Create the reverse port-forwarding with:

autossh -M 0 -f -T -N -n -q -R $PORT:localhost:22 noekeon

Reuse connection to boost performance

From http://www.debian-administration.org/article/290/Reusing_existing_OpenSSH_v4_connections:

Add the following to ~/.ssh/config:

Host *

ControlPath /tmp/%r@%h:%p

Now we can connect as normal, so long as we make the first connection to any host with -M (for "Master") all subsequent connections will be much faster.

Or, force the -M for the first connection automatically with

Host *

ControlMaster auto

ControlPath /tmp/%r@%h:%p

This trick can be used to boost the performance of file transfer using scp (to make it similar to tar+ssh, see [3])

Connect through a Proxy

SSH can be told to establish the connection to the server through a proxy by using the option ProxyCommand. Examples of ProxyCommand in the SSH config file:

# none

ProxyCommand none # No proxy

# using nc

ProxyCommand /usr/bin/nc -X connect -x 192.0.2.0:8080 %h %p # Example in ssh_config manpage but DOES NOT WORK

# using connect (aka connect-proxy)

ProxyCommand /usr/bin/connect %h %p # No proxy as well

ProxyCommand /usr/bin/connect -H proxyserver:8080 %h %p # Using HTTP proxy proxyserver:8080

ProxyCommand /usr/bin/connect -h %h %p # Using HTTP proxy defined in env. var HTTP_PROXY

ProxyCommand /usr/bin/connect -S socks5server:1080 %h %p # Using SOCKS5 proxy server socks5server:1080

ProxyCommand /usr/bin/connect -s %h %p # Using SOCKS5 proxy defined in env. var SOCKS5_SERVER

ProxyCommand /usr/bin/connect -S socks4server:1080 -4 %h %p # Using SOCKS4 proxy server socks4server:1080

# Using socat

# HTTP proxy

ProxyCommand socat -ly - PROXY:proxy:%h:%p,proxyport=8080,proxyauth=user:pass

# SOCKS4A proxy (%h is resolved by the proxy)

ProxyCommand socat -ly - SOCKS4A:socksserver:%h:%p,socksport=1080,socksuser=user

# Using ssh-tunnel

ProxyCommand /usr/local/bin/ssh-tunnel.pl -f - - %h %p

Note that connect-proxy also supports NTLM authentication (see Proxy)

If a hostname matches several sections, first match found is used. Use ProxyCommand none to override a default proxy configuration:

Host 192.*

ProxyCommand none # Otherwise setting in Host * would be taken

Host *

ProxyCommand /usr/local/bin/ssh-tunnel.pl -f - - %h %p # Default proxy settings

Dealing with Proxy Time-Out using ssh-tunnel

In some cases, the proxy might wait for the client (i.e. the local PC) to send an authentication string, as is the case in the SSL protocol. A solution for this is described in Yobi. It consists in sending the client SSH banner immediately, and stripping it when it is later sent by the client. The solution described uses a custom Perl script as ProxyCommand: ssh-tunnel.pl.

- ssh-tunnel

On Ubuntu/Debian, first install the dependencies:

sudo apt-get install ssh libssl-dev libgetopt-long-descriptive-perl libmime-base64-urlsafe-perl libnet-ssleay-perl libio-socket-ssl-perl libauthen-ntlm-perl

Then install ssh-tunnel (if needed, create ~/bin first and don't forget to add ~/bin in the path in .bashrc before /usr/bin and /usr/local/bin):

tar -xvzf ssh-tunnel-2.26.tgz

make install

#Create an empty ssh banner (will be updated at the first connection)

touch ~/.ssh/clbanner.txt

#Create the links

ln -s /usr/local/bin/ssh.pl ~/bin/ssh

Edit ~/.ssh/config and ~/.ssh/proxy.conf as needed.

- Manual Perl package installation

If the Perl dependency packages are not available, they can be built manually:

First install the packages:

- ssh, libssl-dev (cygwin: openssh, openssl, openssl-devel)

Then fetch and build the Perl packages via CPAN (or consider using cpanminus):

sudo cpan

# on first run, autoconfig starts - see wiki for more details

# Also if proxy must be set, run:

# o conf init urllist

# o conf commit

reload cpan

install Getopt::Long

install MIME::Base64

install Net::SSLeay

install IO::Socket::SSL

install Authen::NTLM

- Troubleshooting

- Run in verbose debug mode:

ssh +v -v noekeon -- -d -d -d -d

- If there are no debug messages, make sure that ssh-tunnel is not started in quiet mode in ~/.ssh/config:

ProxyCommand /usr/local/bin/ssh-tunnel.pl -q -f - - %h %p # BAD - quiet mode

ProxyCommand /usr/local/bin/ssh-tunnel.pl -f - - %h %p # OK

- Check that the gateway address, first field, is correct in ~/.ssh/proxy.conf. Get the gateway address with

netstat -rn

Creating a VPN over SSH

SSH can set up a full VPN connection, although it requires some manipulation, and it is slower than regular VPN solutions using UDP packets (SSH runs over TCP, and tunnelling traffic over TCP is slower).

- References

- References — with PermitRootLogin=no:

- More Trickiness With SSH (http://nick.zoic.org/art/etc/ssh_tricks/)

- More Trickiness With SSH: Comments [4]

- References — with PermitRootLogin=yes:

- SSH_VPN (rules to make the process automatic)

- More references

- tun/tap on ubuntu (creating tun device using OpenVPN)

- tunctl manual pages

- ssh manual pages

- tun/tap FAQ

Preliminary Setup:

- First, on the server side, the ssh daemon must allow creating tunnels. For this setup, however, you don't need to enable PermitRootLogin. Edit /etc/ssh/sshd_config:

+PermitTunnel yes

- Check if ip supports creation of tun/tap interfaces:

ip tuntap help

- If not, install openVPN (or a recent version of tunctl):

sudo apt-get install openvpn

- Unlike what is proposed in the ssh manual, we are not going to use private addresses (10.*.*.*); instead we pick two addresses in the remote network that we hope are not used by any computer on that network. This is because packets with a private source address seem to be lost (no reply). Say we pick addresses 123.123.123.1 and 123.123.123.2.

- Also check that kernel on the client supports forwarding of packets, and that the firewall authorizes it as well ([5]):

sudo su

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -P FORWARD ACCEPT

iptables -F FORWARD

Setting up the VPN:

- Log on the server, and create the tun0 interface with ip or openvpn, and configure the interface:

sudo ip tuntap add dev tun0 mode tun user $USER # or 'sudo openvpn --mktun --dev tun0 --user $USER'

sudo ifconfig tun0 123.123.123.2 pointopoint 123.123.123.1 netmask 255.255.255.252 # Assign 123.123.123.2 as local IP addr on tun0 (and .1 as peer)

sudo route add -net 123.123.120.0/22 dev tun0 # This will route all packets to the 123.123.120.0/22 network through

# ... our tun0 interface directly to the client.

# ... these packets will have 123.123.123.2 as source IP

- On the client, create the tun0 interface, and set up the tunnel and route table:

sudo ip tuntap add dev tun0 mode tun user $USER # or 'sudo openvpn --mktun --dev tun0 --user $USER'

sudo ifconfig tun0 123.123.123.1 pointopoint 123.123.123.2 netmask 255.255.255.252 # Assign 123.123.123.1 as local IP addr on tun0 (and .2 as peer)

sudo route add -host 123.123.123.2 dev tun0 # Route all packets for 123.123.123.2 through tun0 itf

sudo arp -Ds 123.123.123.2 eth0 pub # Tell all computers on eth0 that we'll take care of packets for IP 123.123.123.2

ssh -f -w 0:0 $SSHSERVER true # SSH in background, tunnel from tun0:tun0

Troubleshooting

- Set up tcpdump on 'tun0' on the client and server:

sudo tcpdump -i tun0 -n icmp # On the client and server

- Then on the server, ping the client and verify that ICMP packets are sent back and forth:

ping 123.123.123.1 # peer on tun0 itf

- Second, ping a computer on the remote network from the server. On the client, set up tcpdump to now monitor packets on the 'eth0' interface:

sudo tcpdump -i eth0 -n icmp # On the client

sudo tcpdump -i tun0 -n icmp # On the server

ping 123.123.120.1 # On the server

- Observe that the packets are now also forwarded by the client over the 'eth0' interface.

If packets are sent but none are received, the ARP proxy is likely not set up correctly or not published (pub keyword with arp). If no packets are sent or received on eth0, but packets are seen on the client tun0 interface, then packet forwarding is probably not enabled in the client kernel, or the firewall forbids it.

Remarks:

- The SSH manpage creates tun devices with different IDs, but this is only needed when ssh'ing to localhost.

- Use -w any:any to let ssh use any available tun devices.

- Using -o Tunnel=Ethernet is mandatory for tap interfaces, otherwise you get a "tunnel device open failed" error.

- Enabling PermitRootLogin offers more flexibility. The security impact can be greatly reduced by restricting root ssh access to executing a forced command (see [6] as an example).

Multiplexing SSH connection for faster connect

SSH may multiplex several connections on a single one to accelerate new connections.

This uses the config parameters ControlMaster, ControlPath and ControlPersist in ~/.ssh/config.

See Multiplexing for details ([7], [8]).
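A sketch of the three parameters passed with -o, so they can be tried without editing ~/.ssh/config. The helper names mux_open, mux_check and mux_stop are hypothetical, and the socket is kept in ~/.ssh rather than in the world-writable /tmp:

```shell
#!/bin/bash
# Control socket path; ssh expands %r (remote user), %h (host) and %p (port).
MUX_PATH="$HOME/.ssh/cm-%r@%h:%p"

# Start a master connection in the background (or reuse a running one);
# ControlPersist keeps it alive 10 minutes after the last session closes.
mux_open() {
  ssh -o ControlMaster=auto -o ControlPath="$MUX_PATH" -o ControlPersist=10m -f -N "$1"
}

# Ask the master whether it is running, or tell it to exit.
mux_check() { ssh -o ControlPath="$MUX_PATH" -O check "$1"; }
mux_stop()  { ssh -o ControlPath="$MUX_PATH" -O exit "$1"; }
```

After mux_open server, any ssh or scp invoked with the same -o ControlPath option reuses the open connection and skips the key exchange and authentication.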

Dynamic configuration using Match

Instead of using the Host keyword to group ssh configuration in ~/.ssh/config, we can use Match to build more complex configurations, including configurations that depend on runtime conditions.

For instance, assume we have a script on-acme-network that succeeds (exit status 0) if we are on the ACME network, which it detects by checking the current default gateway:

#! /bin/bash

#

# Script on-acme-network

function get_gateway()

{

if [ -x /sbin/ip ]; then

/sbin/ip route | awk '/^default/ { print $3 }' | head -n 1

else

/bin/netstat -rn | perl -lne "print for /^0\.0\.0\.0 +([\.0-9]+) /g" | head -n 1

fi

}

[ "10.10.10.10" = "$(get_gateway)" ]

Then we can create an SSH config that connects to our hosts through a proxy when we are on the ACME network, and directly otherwise:

# ~/.ssh/config

Host my_ssh_server

User some_user

HostName my_ssh_server.com

Host my_other_ssh

User some_user

HostName my_other_ssh.com

Host *

ForwardX11 no

ForwardX11Trusted no

ServerAliveInterval 15

Match exec on-acme-network

ProxyCommand connect-proxy -H proxy.acme.com:8080 %h %p

Note: another solution for dynamic configuration is to create config as a named pipe and make sure there is always a process writing to it, or use scriptfs [9].

Restrict ssh connection to a given command

This is done in authorized_keys. For instance, for borg:

command="/usr/local/bin/borg serve --restrict-to-path /home/borg/repo/",restrict ssh-ed25519 AA...

To restrict to port forwarding only:

# On the client

autossh -M 0 -f -N -n -q -L 9001:localhost:9001 -L 9002:localhost:9002 server

ssh -f -N -n -q -L 9001:localhost:9001 -L 9002:localhost:9002 server

# In authorized_keys

no-pty,no-X11-forwarding,permitopen="localhost:9001",permitopen="localhost:9002",command="/bin/echo do-not-send-commands" ssh-ed25519 AAAA...
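On OpenSSH 7.2 or later, the restrict option disables PTY allocation, agent, X11 and port forwarding (and any restriction added in future versions), so only the features actually needed are re-enabled. A sketch of a hypothetical helper forward_only_entry that builds such a forwarding-only line from a public key file:

```shell
#!/bin/bash
# forward_only_entry PUBKEY_FILE PORT...: hypothetical helper printing an
# authorized_keys line that only allows -L forwarding to localhost:PORT.
forward_only_entry() {
  local pubkey="$1" opts="restrict,port-forwarding" port
  shift
  for port in "$@"; do
    opts+=",permitopen=\"localhost:$port\""
  done
  # Any command the client sends is replaced by this harmless one.
  opts+=',command="/bin/echo do-not-send-commands"'
  printf '%s %s\n' "$opts" "$(cat "$pubkey")"
}
```

Usage: run forward_only_entry ~/.ssh/id_ed25519.pub 9001 9002 on the client and append the printed line to ~/.ssh/authorized_keys on the server.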

Deal with unreliable connection

sshd may not quickly detect that a remote client is no longer connected. As a result, any process launched on the server may keep consuming resources needlessly [10].

Edit client configuration ~/.ssh/config (or /etc/ssh/ssh_config):

Host backupserver

ServerAliveInterval 10

ServerAliveCountMax 30

Here the client closes the connection after 30 consecutive keepalive messages go unanswered (i.e. 10s × 30 = 300s in total).

Edit the server configuration, /etc/ssh/sshd_config:

ClientAliveInterval 10

ClientAliveCountMax 30

Here the server closes the connection after 30 consecutive keepalive messages go unanswered (i.e. 300s in total).

With this configuration, a dead connection is closed on either side after about 300s, and any resources locked by that session are released. For instance, one could give a client process a 600s timeout to connect to the remote host and acquire that remote resource.
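The 300s figure is simply interval × count. A sketch that reads both values back with ssh -G (OpenSSH 6.8+, no connection is made) from a throw-away copy of the client section above, then does the multiplication:

```shell
#!/bin/bash
# Throw-away copy of the client settings for the example host 'backupserver'.
cfg="$(mktemp)"
printf 'Host backupserver\nServerAliveInterval 10\nServerAliveCountMax 30\n' > "$cfg"

# 'ssh -G' prints every resolved option as "keyword value" (keywords in lower case).
settings="$(ssh -F "$cfg" -G backupserver)"
interval="$(awk '$1 == "serveraliveinterval" { print $2 }' <<<"$settings")"
count="$(awk '$1 == "serveralivecountmax" { print $2 }' <<<"$settings")"

echo "dead connection dropped after about $(( interval * count ))s"
rm -f "$cfg"
```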

Read password from environment with ssh-agent

Say the password is in the exported variable SSH_PWD. First we make a script, echo-ssh-pwd, that echoes that variable:

#! /bin/bash

echo "$SSH_PWD"

Then make it executable

chmod a+x echo-ssh-pwd

Then in our ssh connection script:

# DISPLAY setting mandatory, /dev/null redirect mandatory, setsid optional?

# echo-ssh-pwd must be in PATH (or give its full path), and SSH_PWD must be exported

DISPLAY=":0.0" SSH_ASKPASS="echo-ssh-pwd" setsid ssh-add ~/.ssh/id_ed25519 </dev/null

# ssh ...
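On OpenSSH 8.4 or later, SSH_ASKPASS_REQUIRE=force makes ssh-add use SSH_ASKPASS even without DISPLAY or a terminal, which removes the need for the DISPLAY and setsid workarounds. A sketch of a hypothetical helper add_key_from_env that writes a temporary askpass script in place of echo-ssh-pwd; SSH_PWD must still be exported and an ssh-agent must be running:

```shell
#!/bin/bash
# add_key_from_env KEYFILE: hypothetical helper that loads KEYFILE into the
# running ssh-agent, taking the passphrase from the exported $SSH_PWD.
add_key_from_env() {
  local askpass rc
  askpass="$(mktemp)" || return 1
  # Tiny askpass program: ssh-add runs it and reads the passphrase from its stdout.
  printf '#!/bin/sh\nprintf "%%s\\n" "$SSH_PWD"\n' > "$askpass"
  chmod u+x "$askpass"
  # 'force' (OpenSSH 8.4+) makes ssh-add use SSH_ASKPASS without DISPLAY or a tty.
  SSH_ASKPASS="$askpass" SSH_ASKPASS_REQUIRE=force ssh-add "$1" </dev/null
  rc=$?
  rm -f "$askpass"
  return "$rc"
}
```

Usage: export SSH_PWD=..., then add_key_from_env ~/.ssh/id_ed25519.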

Troubleshooting

Slow SSH connection setup

- DNS time-out on the server

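Whenever a new client connects, the server may do a reverse-DNS look-up (controlled by UseDNS in /etc/ssh/sshd_config). If the client IP can't be resolved, the SSH connection is suspended until the DNS request times out.

FIX: Add the client IP and name to /etc/hosts on the server, or set UseDNS no in /etc/ssh/sshd_config.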

Missing Locale in Perl

When logging in from a client with different locale from the server, perl returns a warning about missing locale:

perl: warning: Setting locale failed.

perl: warning: Please check that your locale settings:

LANGUAGE = (unset),

LC_ALL = (unset),

LC_PAPER = "fr_BE.UTF-8",

...

LC_NAME = "fr_BE.UTF-8",

LANG = "en_US.UTF-8"

are supported and installed on your system.

perl: warning: Falling back to the standard locale ("C").

- This is fixed by telling sshd not to accept the client's LC_* locale variables. Edit /etc/ssh/sshd_config:

- AcceptEnv LANG LC_*

+ AcceptEnv LANG

Weird fingerprint format (md5 vs sha256)

Since OpenSSH 6.8, the format of fingerprint switched from md5 to sha256 (in Base64). From superuser.com:

- Force display of md5 fingerprint

ssh -o FingerprintHash=md5 example.org

- Get md5 or sha256 fingerprint

ssh-keyscan example.org > key.pub # or find the keys on the server in /etc/ssh

# Get fingerprint with the default hash (depends on OpenSSH version)

ssh-keygen -l -f key.pub

# Get md5 format

ssh-keygen -l -f key.pub -E md5

# Get sha256/base64 format

awk '{print $2}' ssh_host_rsa_key.pub | base64 -d | sha256sum -b | awk '{print $1}' | xxd -r -p | base64
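The manual pipeline keeps the trailing '=' padding that ssh-keygen omits. A sketch of a hypothetical helper pubkey_sha256 that wraps the same pipeline, strips the padding and adds the SHA256: prefix, so its output can be compared directly with ssh-keygen -l -E sha256 (assumes GNU coreutils base64/sha256sum and xxd are installed):

```shell
#!/bin/bash
# pubkey_sha256 FILE: hypothetical helper printing the SHA256 fingerprint of
# the first key in a .pub (or ssh-keyscan output without host names) file.
pubkey_sha256() {
  local digest
  # Field 2 is the base64 key blob; hash the decoded blob, then re-encode
  # the raw 32-byte digest (not its hex form) in base64.
  digest="$(head -n 1 "$1" | awk '{print $2}' | base64 -d \
    | sha256sum -b | awk '{print $1}' | xxd -r -p | base64)"
  # OpenSSH prints the base64 digest without the trailing '=' padding.
  printf 'SHA256:%s\n' "${digest%%=*}"
}
```

Usage: pubkey_sha256 /etc/ssh/ssh_host_ed25519_key.pub prints the same SHA256:... string as ssh-keygen -l -f on that file.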

ssh does not close connection when remote command exits

This is likely due to the remote command creating new processes that attach to stdin/stdout/stderr [11].

To fix:

- Redirect stdin/stdout/stderr

ssh user@host "/script/to/run < /dev/null > /tmp/mylogfile 2>&1 &"

- Use -t:

ssh -t user@host "/script/to/run"

ssh saying Connection to xxx closed after remote command execution

ssh -t user@host somecmd

# ...

# Connection to xxx closed.

The solution is simply to use -q

ssh -qt user@host somecmd

# ...